CMVS support in PhotoSynthToolkit:

First of all, I’m sorry: this post is not about CMVS support in PhotoSynthToolkit. Releasing the PhotoSynthToolkit with CMVS support is way more complicated than predicted… This is because it is not just a file conversion process (as in my PhotoSynth2PMVS). I have designed a library (OpenSyntherLib) that extracts features, matches them, builds tracks and then triangulates them using the PhotoSynth camera parameters. The problem is that this library is highly configurable so that it can be tuned to each dataset’s needs, so providing an automatic solution with good parameters is difficult.
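
To give an idea of what the "build tracks" step involves, here is a minimal sketch in Python. It is not the OpenSyntherLib code: the function name `build_tracks`, the `(image, feature)` tuple layout and the consistency filter are assumptions made for illustration. It groups pairwise matches into tracks with a union-find structure and drops tracks that contain two features from the same image.

```python
from collections import defaultdict


def build_tracks(matches):
    """Group pairwise feature matches into tracks (illustrative sketch).

    `matches` is an iterable of ((image_a, feature_a), (image_b, feature_b)).
    Returns a sorted list of tracks, each a sorted list of (image, feature).
    """
    parent = {}

    def find(node):
        # Register unseen nodes as their own root.
        parent.setdefault(node, node)
        root = node
        while parent[root] != root:
            root = parent[root]
        # Path compression: point every visited node straight at the root.
        while parent[node] != root:
            parent[node], node = root, parent[node]
        return root

    # Union the two observations of every match.
    for a, b in matches:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    groups = defaultdict(list)
    for node in list(parent):
        groups[find(node)].append(node)

    tracks = []
    for members in groups.values():
        images = [image for image, _ in members]
        # Assumed filter: keep tracks seen in at least two images and
        # reject tracks where one image contributes two features.
        if len(members) >= 2 and len(set(images)) == len(images):
            tracks.append(sorted(members))
    return sorted(tracks)
```

Each surviving track is a candidate 3D point: its observations are what would then be triangulated with the known cameras.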

The new PhotoSynth2CMVS tool generates a Bundler-compatible file (“bundle.out”). I’ve sent the bundle.out file of the V3D dataset to Olafur Haraldsson and he has managed to create a 36-million-vertex point cloud with it! It will be showcased in an upcoming post.
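
For readers who have never opened a bundle.out, the sketch below writes one in Python, following the Bundler v0.3 text layout as I understand it: a header line, the camera and point counts, then five lines per camera (focal length and two radial distortion terms, three rotation rows, translation) and three lines per point (position, color, view list). The function name `format_bundle` and the dictionary keys are hypothetical, and this is not the PhotoSynth2CMVS code. It also does not deal with coordinate-system conventions, which a real converter has to get right.

```python
def format_bundle(cameras, points):
    """Serialize cameras and points to Bundler v0.3 text (illustrative sketch).

    cameras: list of dicts with keys "f", "k1", "k2", "R" (3x3), "t" (3).
    points:  list of dicts with keys "xyz" (3), "rgb" (3 ints) and
             "views", a list of (camera_index, key_index, x, y).
    """
    def num(value):
        # Compact float formatting with up to 10 significant digits.
        return f"{value:.10g}"

    lines = ["# Bundle file v0.3", f"{len(cameras)} {len(points)}"]

    for cam in cameras:
        lines.append(" ".join(num(cam[key]) for key in ("f", "k1", "k2")))
        for row in cam["R"]:
            lines.append(" ".join(num(v) for v in row))
        lines.append(" ".join(num(v) for v in cam["t"]))

    for point in points:
        lines.append(" ".join(num(v) for v in point["xyz"]))
        lines.append(" ".join(str(int(c)) for c in point["rgb"]))
        fields = [str(len(point["views"]))]
        for camera_index, key_index, x, y in point["views"]:
            fields += [str(camera_index), str(key_index), num(x), num(y)]
        lines.append(" ".join(fields))

    return "\n".join(lines) + "\n"
```

The view list is the part that makes this more than a file conversion: every 3D point must list the cameras (and keypoints) that see it, which is exactly what the tracks provide.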

PhotoSynth WebGL Viewer:

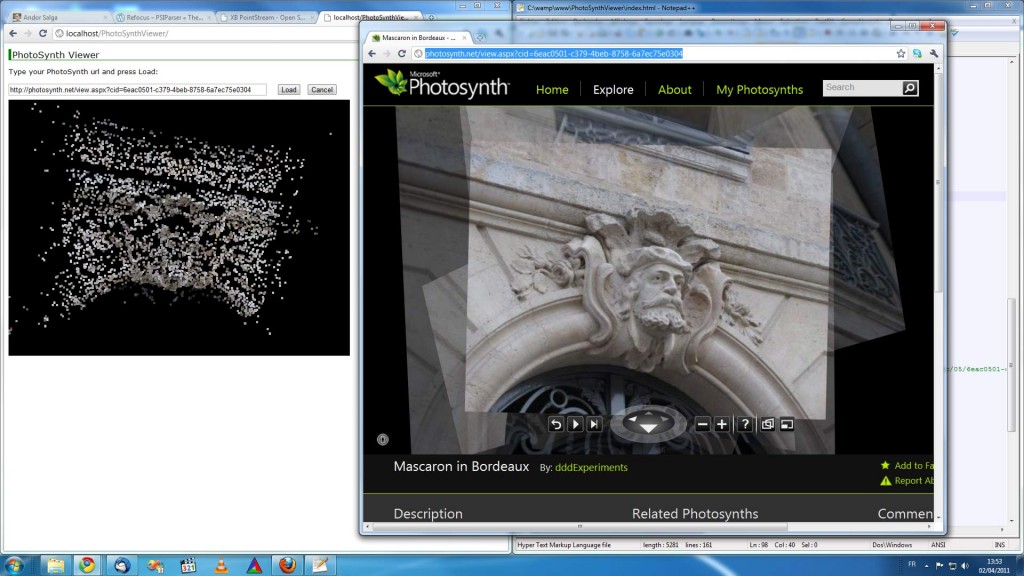

As soon as I saw the SpiderGL presentation at the 3D-Arch Workshop, I decided to implement a PhotoSynth viewer with WebGL! Thanks to Cesar Andres Lopez I found XB PointStream, which is very well designed and documented. Implementing a PhotoSynth parser turned out to be super easy! Porting my PhotoSynthParser.cpp took less than 10 minutes thanks to jDataView and the binary ajax developed by Vjeux. After 2 hours of hacking I got this first viewer:

I can’t host the viewer on my website, as it uses a proxy to work around the ajax cross-domain security restriction (so it would blow through my bandwidth limit). So I’ve made a video to show you how it looks:

The viewer source code will be hosted on my GitHub and I’m working on a Google Chrome extension to bypass the need for a proxy… Having a Google Chrome extension that replaces the Silverlight viewer on photosynth.net would be nice too (if you don’t have Silverlight).

Very Nice !

WebGL is promising, and I hope such a WebGL viewer could be compatible with Bundler/CMVS output if it is able to load PLY point files!

This looks like the beginning of good things, Henri! Looking forward to seeing the output of Photosynth2CMVS.

Out of curiosity, does your WebGL viewer download as many chunks of the point cloud concurrently as the browser will allow or download them sequentially?

From what I can see with the fountain dataset, it appears that your viewer reads points into memory and begins displaying them as it receives them. Is this correct or does it wait until the entire point cloud is downloaded to display anything?

Am I right in thinking that so far your viewer only loads the primary point cloud for a synth?

Have you tested the viewer at all with Opera 11.5 for Windows? http://labs.opera.com/news/2011/02/28/

Do you have any plans for adding code to the viewer to read the deep zoom images and collections? This would really be a significant addition.

I believe that in geoaligned synths, not only is the lat-long present in the synth metadata, but also the orientation and scale information. Have you considered an option to display the point clouds (and hopefully one day photos) over a map?

Have you considered simultaneously loading all geoaligned synths into the viewer for popular subjects such as the Statue of Liberty? I would love to see this beginning to happen in the community, since so little news about it has come from the Photosynth team, even though they made it clear years ago that this was part of their plan.

I’m not sure what metadata external applications are allowed to write back to Photosynth via their web service, but if your viewer allowed us to load multiple synths from the same location over a satellite map, it would help to align all of them and then save out geoalignment data for all collections.

I would also love to be able to geoalign all coordinate systems in a synth, rather than only the primary point cloud.

If the Photosynth web service does not allow us to write geoalignment data back to their database, then I would love to see you or someone else in the community begin a Photosynth Geo-Alignment database where any of us can geoalign anyone’s synth (and ideally panorama eventually) to the map, regardless of whether the synth or pano author has taken the time to geo-tag and geo-align it themselves. I know that I, myself, have seen many synths without a geotag which I would have happily pinpointed and aligned. If others are like me, it seems like a waste to not begin taking advantage of people’s willingness to help and begin storing this knowledge in a central place that the WebGL viewer can access.

If Photosynth2CMVS gets to where you want it to be and someone in the community is willing to solve the hosting, I would love to see a database of dense reconstructions. Each one would be tagged with the Photosynth collection ID(s) of the source synth(s) from which it was derived, and would also note which version of CMVS it was reconstructed with, what settings were used, and which user contributed it (allowing contributors to link their ID to their various other photogrammetry IDs, i.e. their Photosynth username and PhotoCity username). Then we have a shot at creating something very powerful.

I would love to see the Photosynth team themselves pursue all of the things I’ve listed above, but as long as they are not talking about it or giving any clear idea as to when we can expect these features to arrive, I would like to see talented people from the photogrammetry community begin putting it together as best we can.

The viewer described by Henri is in full use at http://www.3dtubeme.com, an SfM social site created by Cesar Andres Lopez and Olafur Haraldsson.

@Cesar, excellent! Looks like the hosting I mentioned could be solved by you guys.

@Pierre: Thanks! The original viewer can parse ply without trouble although there is no Parser available yet (but ASC format is very close to ply). So using this player with Bundler output should be pretty straightforward.
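
To show how little a PLY parser needs for point clouds, here is a minimal Python sketch that reads the vertex positions from an ASCII PLY file. It is not the viewer’s code: the function name `read_ascii_ply_vertices` is made up, it assumes the `vertex` element comes first in the file, and it ignores binary PLY entirely.

```python
def read_ascii_ply_vertices(text):
    """Return [(x, y, z), ...] from the text of an ASCII PLY file (sketch)."""
    lines = iter(text.splitlines())
    if next(lines, "").strip() != "ply":
        raise ValueError("not a PLY file")

    vertex_count = 0
    properties = []          # property names of the vertex element, in order
    current_element = None

    # Header: collect the vertex count and the vertex property names.
    for line in lines:
        tokens = line.split()
        if not tokens or tokens[0] == "comment":
            continue
        if tokens[0] == "format":
            if tokens[1] != "ascii":
                raise ValueError("only ASCII PLY is handled in this sketch")
        elif tokens[0] == "element":
            current_element = tokens[1]
            if current_element == "vertex":
                vertex_count = int(tokens[2])
        elif tokens[0] == "property" and current_element == "vertex":
            properties.append(tokens[-1])
        elif tokens[0] == "end_header":
            break

    # Body: one line per vertex, values in the declared property order.
    vertices = []
    for _ in range(vertex_count):
        record = dict(zip(properties, next(lines).split()))
        vertices.append(tuple(float(record[axis]) for axis in ("x", "y", "z")))
    return vertices
```

Extra per-vertex properties such as colors or normals are simply skipped here; a viewer would pick them out of `record` in the same way.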

@Cesar Lopez: I’ve added a link to your website now that your project is public, wish you the best!

@Nate Lawrence: Ouch! This is a huge comment… Not sure that everybody is going to read that; IMO you should have sent me a mail instead… I’ll try to answer your questions anyway:

The WebGL viewer downloads the bin chunks of 5000 vertices sequentially and displays each chunk as soon as it is fully downloaded.
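
In other words, the loading loop looks roughly like the Python sketch below (the real viewer is JavaScript, and the names `stream_chunks`, `fetch_chunk` and `display` are hypothetical). It assumes the total vertex count is known before the first chunk is requested.

```python
import math

CHUNK_SIZE = 5000  # vertices per bin chunk


def chunk_count(total_vertices, chunk_size=CHUNK_SIZE):
    """Number of chunks needed to hold `total_vertices` vertices."""
    return math.ceil(total_vertices / chunk_size)


def stream_chunks(total_vertices, fetch_chunk, display):
    """Fetch chunks one at a time and display each as soon as it arrives.

    fetch_chunk(index) -> list of vertices (returns once the chunk is complete)
    display(vertices)  -> adds the vertices to the scene
    Returns the number of vertices displayed.
    """
    shown = 0
    for index in range(chunk_count(total_vertices)):
        vertices = fetch_chunk(index)  # the next request waits for this one
        display(vertices)              # draw before moving to the next chunk
        shown += len(vertices)
    return shown
```

Downloading several chunks concurrently would fill the cloud faster, but the sequential version keeps the display order predictable and the code short.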

You’re right; I’m only displaying the first coord system: this is because the others are in different coord systems! (So displaying multiple coord systems together could be tricky, I mean not very meaningful.)

The viewer is now working with Firefox4, Chrome10 and Opera 11.5!

FYI, I’ve checked the PhotoSynth WebService and found out that I should be able to produce a geo-referenced point cloud (with scale and rotation).

I definitely want to add deep zoom collections + multiple synths + a geo-referenced map to my viewer, but it is hard to stay motivated when you are working alone on different projects (and not being paid for it).

I also agree that a WebService that produces dense meshes from PhotoSynth would be great… but I’m realistic about the economic viability of such a service: servers are expensive and you’ll have to pay for SiftGPU/Bundler/CMVS/PMVS licenses if you want to charge people to get the models. And there are better algorithms out there that are faster and use the GPU for dense reconstruction as well.

@Henri, sorry for the long comment (I didn’t plan it that way) and thank you, as always, for your hard work. It’s great to see someone taking time to care about Opera.

I assume that the viewer works in WebKit Nightlies as well, because 3DTubeMe was listing that as one of their three recommendations.

As far as displaying different point clouds, all I meant was loading them all so that I could switch between them if desired – in the same way as the official synth viewers. Of course, you are right – displaying them as their coordinates currently exist does not make sense, however I continue to desire to have an end user tool which allows me to position all of a synth’s coordinate systems relative to each other on a map so that they can be meaningfully viewed simultaneously.

As to building a webservice that stores user-contributed dense reconstructions of synths and geo-align information not provided by the authors, I’d like to see it done as a free service, not paid, but I know that there are strong challenges present in running such an operation. As I am not in a place to pursue that myself, I cannot criticise anyone else for not leaping at the opportunity either, but wanted to spell out some of my vision of things that need to be done but are being ignored in hopes that others share some of that vision who might be in a position to do something about it.

I’ll leave it by saying that I have also run a site as a free service before, funding it completely alone with both my own time and money and I understand how draining that can be. I am in the process of working off some debt, but when I am in a place that I can contribute (hopefully later this year), I will remember your Donate button.

Cheers!

N