The idea of this pose estimator is based on PTAM (Parallel Tracking and Mapping). PTAM can track in an unknown environment thanks to the mapping it performs in parallel. But in practice, if you want to augment reality, it’s generally because you already know what you are looking at, so tracking in an unknown environment is not always needed. My idea was simple: instead of mapping in parallel, why not use SfM (Structure from Motion) in a pre-processing step?

|

|

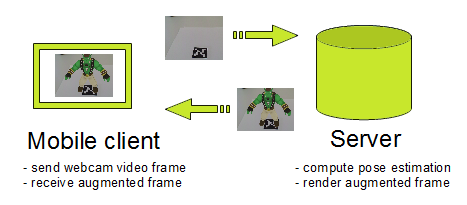

| input: point cloud + camera shot | output: position and orientation of the camera |

So my outdoor tracking algorithm will eventually work like this:

- pre-processing step

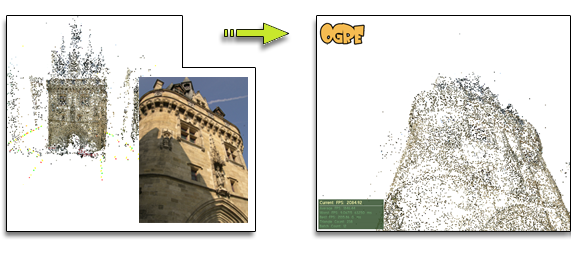

- generate a point cloud of the outdoor scene you want to track using Bundler

- create a binary file with a descriptor (Sift/Surf) per vertex of the point cloud

- in real-time, for each frame N:

- extract feature using FAST

- match feature from frame N-1 using 2D patch

- compute “relative pose” between frame N and N-1

- in almost real-time, for each “key frame”:

- extract feature and descriptor

- match descriptor with those of the point cloud

- generate 2D/3D correspondence from matches

- compute “absolute pose” using PnP solver (EPnP)
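The pre-processing step only specifies “a binary file with one descriptor per vertex”. As an illustration, here is one possible layout, sketched in Python under stated assumptions: a little-endian header holding the vertex count and the descriptor length, followed by each vertex as x, y, z plus its descriptor, all stored as float32. The layout and the `encode_cloud`/`decode_cloud` names are hypothetical, not the format SiftGPU or Bundler actually write.

```python
import struct

# Hypothetical header (an assumption): vertex count and descriptor length as uint32.
HEADER = struct.Struct("<II")


def encode_cloud(vertices):
    """Serialize [((x, y, z), descriptor), ...] into the bytes of a binary cloud file.

    All descriptors must have the same length; every value is stored as float32.
    """
    dim = len(vertices[0][1]) if vertices else 0
    record = struct.Struct(f"<{3 + dim}f")  # x, y, z followed by the descriptor
    chunks = [HEADER.pack(len(vertices), dim)]
    for xyz, desc in vertices:
        chunks.append(record.pack(*xyz, *desc))
    return b"".join(chunks)


def decode_cloud(data):
    """Inverse of encode_cloud: returns a list of ((x, y, z), descriptor) tuples."""
    count, dim = HEADER.unpack_from(data, 0)
    record = struct.Struct(f"<{3 + dim}f")
    vertices = []
    for i in range(count):
        values = record.unpack_from(data, HEADER.size + i * record.size)
        vertices.append((values[:3], list(values[3:])))
    return vertices
```

Writing the file is then just `open(path, "wb").write(encode_cloud(vertices))`, and the tracker loads it once at start-up.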
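The real-time step can be sketched in pure Python on grayscale images stored as nested lists: the FAST segment test detects corners in frame N-1, then each corner is located in frame N by comparing small 2D patches. The threshold of 20, the 9-pixel contiguous arc (the FAST-9 variant), the sum-of-squared-differences (SSD) score and the exhaustive ±4-pixel search window are illustrative assumptions, and `detect_fast`/`track_patch` are hypothetical helpers. This is a slow reference sketch, not a real-time implementation.

```python
# Offsets (dx, dy) of the 16-pixel Bresenham circle of radius 3 used by FAST.
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img, x, y, threshold=20, n=9):
    """Segment test: True if n contiguous circle pixels are all brighter than
    center + threshold, or all darker than center - threshold (assumed FAST-9)."""
    center = img[y][x]
    labels = []  # +1 brighter, -1 darker, 0 similar
    for dx, dy in CIRCLE:
        v = img[y + dy][x + dx]
        if v > center + threshold:
            labels.append(1)
        elif v < center - threshold:
            labels.append(-1)
        else:
            labels.append(0)
    # Walk the circle twice so that arcs wrapping past index 0 are counted.
    run, prev = 0, 0
    for label in labels + labels:
        if label != 0 and label == prev:
            run += 1
        else:
            run = 1 if label != 0 else 0
        prev = label
        if run >= n:
            return True
    return False


def detect_fast(img, threshold=20, n=9):
    """Return the (x, y) of every FAST corner, skipping the 3-pixel border."""
    h, w = len(img), len(img[0])
    return [(x, y)
            for y in range(3, h - 3)
            for x in range(3, w - 3)
            if is_fast_corner(img, x, y, threshold, n)]


def patch_ssd(img_a, xa, ya, img_b, xb, yb, r):
    """SSD between the (2r+1)x(2r+1) patches centred at (xa, ya) and (xb, yb)."""
    total = 0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            d = img_a[ya + dy][xa + dx] - img_b[yb + dy][xb + dx]
            total += d * d
    return total


def track_patch(prev_img, next_img, x, y, r=2, search=4):
    """Find where the patch around (x, y) in prev_img moved to in next_img.

    Exhaustive search over a (2*search+1)^2 window; returns ((x', y'), ssd).
    """
    h, w = len(next_img), len(next_img[0])
    best, best_pos = None, None
    for ny in range(y - search, y + search + 1):
        for nx in range(x - search, x + search + 1):
            # Skip candidates whose patch would fall outside the image.
            if not (r <= nx < w - r and r <= ny < h - r):
                continue
            s = patch_ssd(prev_img, x, y, next_img, nx, ny, r)
            if best is None or s < best:
                best, best_pos = s, (nx, ny)
    return best_pos, best
```

The matched positions in frame N are what the relative pose estimator would consume; a practical tracker would also reject matches whose best SSD is too high.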
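For the key-frame step, the sketch below matches each frame descriptor against the point-cloud descriptors by brute-force nearest-neighbour search and keeps the resulting (pixel, 3D point) pairs, which is the kind of input a PnP solver such as EPnP expects. Filtering ambiguous matches with Lowe’s ratio test (threshold 0.8) is an assumption not stated in the post, and `match_to_cloud` is a hypothetical name.

```python
import math


def l2(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_to_cloud(frame_features, cloud, ratio=0.8):
    """Build 2D/3D correspondences from descriptor matches.

    frame_features: list of ((u, v), descriptor) extracted from the key frame.
    cloud: list of ((X, Y, Z), descriptor), one descriptor per point-cloud vertex.
    A match is kept only if the nearest cloud descriptor is clearly closer than
    the second nearest (assumed ratio test), which rejects ambiguous matches.
    Returns a list of ((u, v), (X, Y, Z)) pairs for the PnP solver.
    """
    correspondences = []
    for pixel, desc in frame_features:
        best = second = math.inf
        best_point = None
        for point, cloud_desc in cloud:
            d = l2(desc, cloud_desc)
            if d < best:
                best, second, best_point = d, best, point
            elif d < second:
                second = d
        if best_point is not None and best < ratio * second:
            correspondences.append((pixel, best_point))
    return correspondences
```

Brute force is O(frame features × cloud vertices), which is one reason removing unused descriptors from the cloud speeds up this step.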

The tricky part is that a single absolute pose computation can take as long as several “relative pose” estimations. So once you’ve got the absolute pose, it already refers to an older frame, and you’ll have to compensate for the delay by accumulating the relative poses computed since then.
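A minimal sketch of that compensation, assuming poses are 4×4 homogeneous world-to-camera matrices and that each relative pose `D_i` maps coordinates in camera i-1 to coordinates in camera i. Under that convention, an absolute pose `T_K` computed for key frame K is brought up to the current frame N as `T_N = D_N @ ... @ D_{K+1} @ T_K`. The convention and the helper names are assumptions for illustration.

```python
def matmul4(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    """Homogeneous 4x4 matrix of a pure translation."""
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]


def compensate_delay(abs_pose_key, rel_poses_since_key):
    """Bring a late absolute pose up to date with the relative poses tracked meanwhile.

    abs_pose_key: 4x4 world->camera transform estimated (slowly) for key frame K.
    rel_poses_since_key: [D_{K+1}, ..., D_N], oldest first, where D_i maps
    camera i-1 coordinates to camera i coordinates (assumed convention).
    Returns T_N = D_N @ ... @ D_{K+1} @ T_K.
    """
    pose = abs_pose_key
    for rel in rel_poses_since_key:
        pose = matmul4(rel, pose)  # left-multiply: newest relative pose applied last
    return pose
```

The order matters: applying the relative poses newest first would give a different (wrong) pose as soon as the camera rotates between frames.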

This is what I’ve got so far:

- pre-processing step: the binary file is generated using SiftGPU (I plan to move to my GPUSurf implementation) and Bundler (I plan to move to Insight3D, or to implement it myself using sba);

- relative pose: I don’t have an implementation of the relative pose estimator yet;

- absolute pose: it’s basically working but needs some improvements:
  - switch feature extraction/matching from SIFT to SURF;
  - remove unused descriptors to speed up the matching step (by using training data to score the descriptors that end up as inliers);
  - use another PnP solver (or add RANSAC to handle outliers and get more accurate results).
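Both the descriptor pruning and the outlier handling come down to deciding which 2D/3D correspondences agree with an estimated pose. One way to sketch it, assuming a pinhole camera without distortion, a known pose (R, t) for each training frame and a 3-pixel reprojection threshold: count how often each vertex is an inlier across the training frames, then drop the vertices that rarely or never are. The same reprojection test is what a RANSAC loop around the PnP solver would use to score its pose hypotheses. All function names here are hypothetical.

```python
import math


def project(K, R, t, X):
    """Pinhole projection of world point X; K = (fx, fy, cx, cy), pose Xc = R * X + t."""
    fx, fy, cx, cy = K
    xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    return (fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy)


def inlier_mask(K, R, t, correspondences, max_error=3.0):
    """True for each ((u, v), X) pair whose reprojection error is below max_error pixels."""
    mask = []
    for (u, v), X in correspondences:
        pu, pv = project(K, R, t, X)
        mask.append(math.hypot(pu - u, pv - v) < max_error)
    return mask


def score_vertices(training_frames, K, max_error=3.0):
    """Count, per vertex id, how often it was an inlier across training frames.

    training_frames: list of (R, t, matches), each match being (pixel, vertex_id, X).
    """
    scores = {}
    for R, t, matches in training_frames:
        corr = [(pixel, X) for pixel, _, X in matches]
        for (_, vid, _), ok in zip(matches, inlier_mask(K, R, t, corr, max_error)):
            if ok:
                scores[vid] = scores.get(vid, 0) + 1
    return scores


def prune_cloud(cloud, scores, min_hits=1):
    """Keep only the vertices (id -> descriptor) seen as inliers at least min_hits times."""
    return {vid: desc for vid, desc in cloud.items() if scores.get(vid, 0) >= min_hits}
```

`min_hits` trades matching speed against robustness: a higher value gives a smaller cloud but risks discarding vertices that are only visible from viewpoints missing from the training data.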