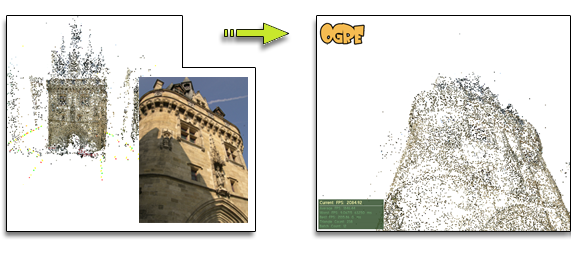

I’ve spent 2 days implementing a WebService in C++. I’ve lost 1 day trying to use WebSocket (this wasn’t a good idea as Firefox and Google Chrome were not implementing the same version). I’ve also lost a lot of time with the beautiful drag and drop API… who is responsible for this mess? Anyway I’ve managed to create this cool demo:

The principle is very simple: the user drag and drop an unknown picture on the webpage. The picture is resized on the client side using canvas and then sent to the webservice using a classic POST Ajax call with the picture sent in jpeg base64. Then the pose estimator web service try to compute the pose and send an xml response. Then the pose is applied to the Three.js camera used in my PhotoSynth viewer.

I have cheated a little as I’ve used shots of a video with known intrinsic parameters. But I’ve two options to handle really unknown pictures:

- If the jpeg as Exif I can use it to find the focal (using a CCD database and a JS Exif parser)

- Otherwise I am planning to implement this in C++

I hope you enjoy this demo as much as I do and that Google and Microsoft are working in that direction too (especially Microsoft with Read/Write World…)