I’ve worked a lot on OpenSynther lately: OpenSynther is the name of my structure-from-motion solution. This new version is a major rewrite of the previous version which was using Surf with both GPU and multi-core CPU matching. The new version is using SiftGPU and Flann to achieve linear matching complexity of unstructured input as described in Samantha paper. You can find more information about OpenSynther features on it dedicated page (including source code).

OpenSynther has been designed as a library (OpenSyntherLib) which has already proven to be useful for several programs written by myself:

- OpenSynther: work in progress… used by my augmented reality demo

- PhotoSynth2CMVS: this allow to use CMVS with PhotoSynthToolkit

- BundlerMatcher: this is the matching solution used by SFMToolkit

Outdoor augmented reality demo using OpenSynther

I’ve improved my first attempt of outdoor augmented reality: I’m now relying on PhotoSynth capability of creating a point cloud of the scene instead of Bundler. Then I’m doing some processing with OpenSynther and here is what what you get:

You can also take a look at the 3 others youtube videos showing this tracking in action around this church: MVI_6380.avi, MVI_6381.avi, MVI_6382.avi.

PhotoSynth2CMVS

This is not ready yet, I still have some stuff to fix before releasing it. But I’m already producing a valid “bundle.out” file compatible with CMVS processing from PhotoSynth. I’ve processed the V3D dataset with PhotoSynth2CMVS and sent the bundle.out file to Olafur Haraldsson who has managed to create the corresponding 36 million vertices point cloud using CMVS and PMVS2:

The V3D dataset was created by Christopher Zach.

BundlerMatcher

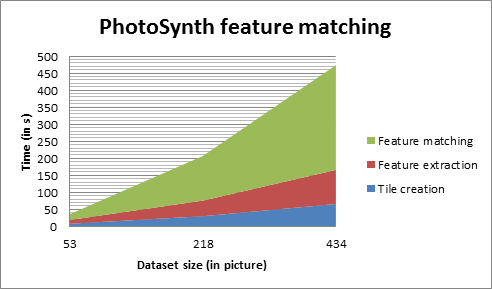

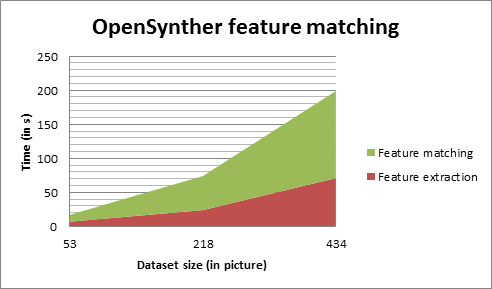

The new unstructured linear matching is really fast as you can see on the above chart compared to PhotoSynth. But the quality of the generated point cloud is not as good as PhotoSynth.

This benchmark was computed on a Core i7 with an Nvidia 470 GTX. I’ve also compared the quality of the matching methods implemented in OpenSynther (linear VS quadratic). I’ve used Bundler as a comparator with a dataset of 245 pictures:

| Linear | Quadratic | |

| Nb pictures registered | 193 | 243 |

| Time spent to register 193 pictures | 33min | 1h43min |

On the one hand, both the matching and the bundle adjustment are faster with linear matching but on the other hand, having only 193 out of 245 pictures registered is not acceptable. I have some idea on how to improve the linear matching pictures registering ratio but this is not implemented yet (this is why PhotoSynth2CMVS is not released for now).

Future

I’ve been playing with LDAHash last week and I’d like to support this in OpenSynther to improve matching speed and accuracy. It would also help to reduce the memory used by OpenSynther (by a factor 16: 128 floats -> 256bits per feature). I’m also wondering if the Cuda knn implementation could speed-up the matching (if applicable)? I ‘d also like to restore the previous Surf version of OpenSynther which was really fun to implement. Adding a sequential bundle adjustment (as in bundler) would be really interesting too…

Off-topic

I’ve made some modifications to my blog: switched to WordPress 3.x, activated page caching, added social sharing buttons and added my LinkedIn account next to the donate button…