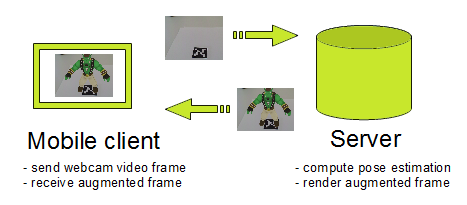

I have created a new augmented reality prototype (about five days of experiments). It uses a client/server approach based on Boost.Asio. The first assumption of this prototype is that you have a mobile client with limited processing power and a powerful server with a decent GPU.

So the idea is simple: the client uploads a video frame, and the server does the pose estimation and sends the augmented rendering back to the client. My first prototype uses ArToolKitPlus in almost real time (15fps), but I’m also working on a markerless version that would be less interactive (< 1fps). The mobile client was a UMPC (Samsung Q1).

Thanks to Boost.Asio I was able to build a solid client/server very quickly. I then created two implementations of PoseEstimator:

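    class PoseEstimator
    {
    public:
        // Runs pose estimation on one video frame; returns true on success.
        bool computePose(const Ogre::PixelBox& videoFrame);

        // Camera pose from the last successful computePose() call.
        Ogre::Vector3 getPosition() const;
        Ogre::Quaternion getOrientation() const;
    };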

- ArToolKitPoseEstimator (using ArToolKitPlus to get pose estimation)

- SfMPoseEstimator (using EPnP and a point cloud generated with Bundler, a Structure from Motion tool, to get pose estimation)
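To illustrate how a server could swap between two such estimators, here is a minimal sketch. It is not the prototype's actual code: `Frame`, `Vec3` and `Quat` are stand-ins for the Ogre types so the example compiles on its own, and `PoseEstimatorBase`, `FixedPoseEstimator` and `makeEstimator` are hypothetical names introduced for the example.

```cpp
#include <memory>
#include <string>

// Stand-ins for Ogre::PixelBox, Ogre::Vector3 and Ogre::Quaternion (assumption:
// used here only so the sketch is self-contained).
struct Frame { const unsigned char* data; int width; int height; };
struct Vec3 { float x, y, z; };
struct Quat { float w, x, y, z; };

// Hypothetical abstract interface mirroring the three PoseEstimator methods.
class PoseEstimatorBase
{
public:
    virtual ~PoseEstimatorBase() = default;
    virtual bool computePose(const Frame& videoFrame) = 0;
    virtual Vec3 getPosition() const = 0;
    virtual Quat getOrientation() const = 0;
};

// Placeholder implementation: accepts any non-empty frame and reports a fixed
// pose one unit in front of the origin. A real estimator would analyse the pixels.
class FixedPoseEstimator : public PoseEstimatorBase
{
public:
    bool computePose(const Frame& videoFrame) override
    {
        return videoFrame.data != nullptr && videoFrame.width > 0 && videoFrame.height > 0;
    }
    Vec3 getPosition() const override { return Vec3{0.0f, 0.0f, 1.0f}; }
    Quat getOrientation() const override { return Quat{1.0f, 0.0f, 0.0f, 0.0f}; }
};

// Hypothetical factory: returns nullptr for names it does not know.
std::unique_ptr<PoseEstimatorBase> makeEstimator(const std::string& name)
{
    if (name == "fixed") {
        return std::unique_ptr<PoseEstimatorBase>(new FixedPoseEstimator());
    }
    return nullptr;
}
```

With an interface like this, the server loop only needs a `PoseEstimatorBase` pointer and does not care whether the pose comes from markers or from a point cloud.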

ArToolKitPoseEstimator

There is nothing fancy about this pose estimator. I implemented it as a proof of concept and to check my server’s performance. In fact, ArToolKit pose estimation is not expensive and can run in real time on a mobile device.

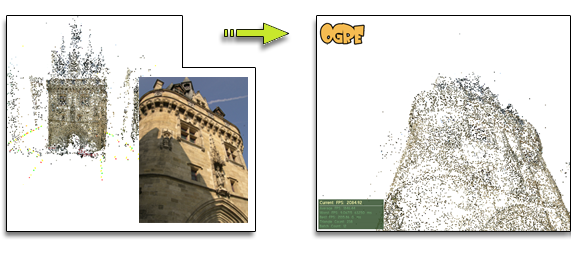

SfMPoseEstimator

I’ll just introduce the concept of this pose estimator in this post. The idea is simple: in augmented reality you generally know the object you are looking at, because you want to augment it. So I create a point cloud of the object to augment (using Structure from Motion) and keep the link between the 3D points and their 2D descriptors. When you take a shot of the scene, you can then compare the 2D descriptors of your shot with those of the point cloud and build 2D/3D correspondences. The pose can then be estimated by solving the Perspective-n-Point camera calibration problem (using EPnP, for example).
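The matching step described above can be sketched as a brute-force nearest-neighbour search with a ratio test. This is only an illustration under stated assumptions, not the prototype's implementation: the types, the tiny 4-value descriptor (real descriptors such as SIFT have 128 values), the `matchToCloud` name and the 0.8 ratio are all hypothetical. The resulting 2D/3D pairs are what a PnP solver such as EPnP would take as input.

```cpp
#include <array>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical types for illustration only.
constexpr std::size_t kDescriptorSize = 4;  // toy size; real descriptors are longer
using Descriptor = std::array<float, kDescriptorSize>;

struct Point2D { float x, y; };
struct Point3D { float x, y, z; };

struct CloudPoint { Point3D position; Descriptor descriptor; };  // from the SfM point cloud
struct Keypoint   { Point2D position; Descriptor descriptor; };  // from the current shot
struct Correspondence { Point2D image; Point3D world; };

// Squared Euclidean distance between two descriptors.
float squaredDistance(const Descriptor& a, const Descriptor& b)
{
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// For each keypoint, find the closest cloud descriptor and keep the pair only
// if it is clearly better than the second closest (ratio test).
std::vector<Correspondence> matchToCloud(const std::vector<Keypoint>& keypoints,
                                         const std::vector<CloudPoint>& cloud,
                                         float maxRatio = 0.8f)
{
    std::vector<Correspondence> result;
    if (cloud.empty()) {
        return result;
    }
    for (const Keypoint& kp : keypoints) {
        float best = std::numeric_limits<float>::max();
        float second = std::numeric_limits<float>::max();
        std::size_t bestIndex = 0;
        for (std::size_t i = 0; i < cloud.size(); ++i) {
            const float d = squaredDistance(kp.descriptor, cloud[i].descriptor);
            if (d < best) {
                second = best;
                best = d;
                bestIndex = i;
            } else if (d < second) {
                second = d;
            }
        }
        // Distances are squared, so the ratio threshold is squared as well.
        if (best < maxRatio * maxRatio * second) {
            result.push_back({kp.position, cloud[bestIndex].position});
        }
    }
    return result;
}
```

A brute-force search like this scales with the number of keypoints times the number of cloud points, which gives a feel for why matching tends to dominate the cost of this kind of estimator.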

Performance

The server is very basic: it doesn’t handle client queuing yet (1 client = 1 thread), but it already does the off-screen rendering and sends the texture back in raw RGB.

The version using ArToolKit only runs at 15fps because I had trouble with the JPEG compression, so I turned it off. This version is therefore only bandwidth limited. I didn’t investigate this issue much because I know that the SfMPoseEstimator is going to be limited by the matching step. Furthermore, I’m not sure that it’s a good idea to send highly compressed images to the server (compression artifacts can add extra features).
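To get a feel for why uncompressed frames make the link the bottleneck, here is a small back-of-the-envelope sketch. The 640x480 resolution is an assumption (the frame size is not stated in this post), and `rawBandwidthBytesPerSecond` is a hypothetical helper written for the example.

```cpp
#include <cstddef>

// Bytes per second needed to stream uncompressed frames in one direction.
constexpr std::size_t rawBandwidthBytesPerSecond(std::size_t width,
                                                 std::size_t height,
                                                 std::size_t bytesPerPixel,
                                                 std::size_t fps)
{
    return width * height * bytesPerPixel * fps;
}

// Assumed 640x480 frame, 3 bytes per pixel (raw RGB), 15 frames per second:
// 640 * 480 * 3 * 15 = 13,824,000 bytes/s, roughly 110 Mbit/s in one direction.
constexpr std::size_t kAssumedBytesPerSecond =
    rawBandwidthBytesPerSecond(640, 480, 3, 15);
```

Under that assumption, the upload and the returned rendering each need on the order of 110 Mbit/s, so the network would saturate well before the pose estimation does.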

My SfMPoseEstimator is also working, but it’s very expensive (~1s using the GPU) and not always accurate due to some flaws in my original implementation. I’ll explain how it works in my next post.
