Happy New Year, everyone!

2010 was a year full of visual experiments for me, and I hope you like what you see on this blog. In this post I give a short overview of all the visual experiments I created during the year. This is an opportunity to catch up on anything you’ve missed! I’d also like to thank some people who have helped me along the way:

- Olafur Haraldsson: for creating the photogrammetry forum

- Josh Harle: for his video tutorials and his nice blog

- You: for reading this

Visual experiments created in 2010:

During the year I added some features to Ogre3D:

- ArToolKitPlus: a marker-based augmented reality system

- CUDA: aimed at beginners (though advanced users may still find some useful code)

- OpenCL: aimed at beginners (though advanced users may still find some useful code)

- HTML5 Canvas: an implementation based on Skia for graphics and V8 for JavaScript scripting

- Kinect: this is a very hacky solution; I’ll improve it later

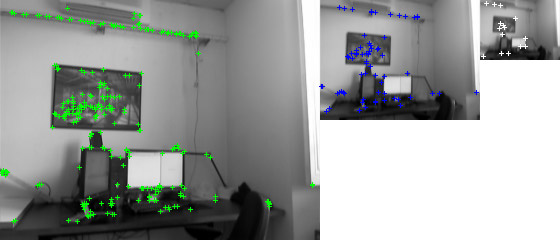

I also taught myself GPGPU programming while coding a partial GPUSurf implementation based on Nico Cornelis’s paper. This implementation is not complete, however, and I would like to rewrite it with a GPGPU framework based on OpenGL and Cg only (not Ogre3D). With such a framework, writing a SIFT/SURF detector should be easier and more efficient.

I have created some visual experiments related to Augmented Reality:

- Remote AR prototype

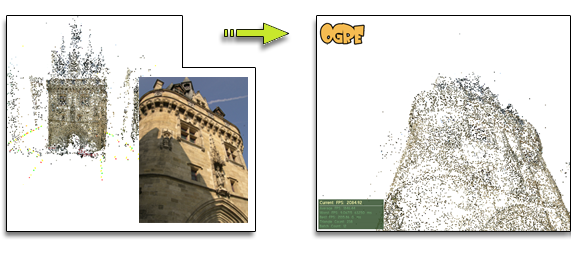

- Outdoor 3D tracking using a point cloud generated by structure-from-motion software

- Outdoor 2D tracking using a panoramic image

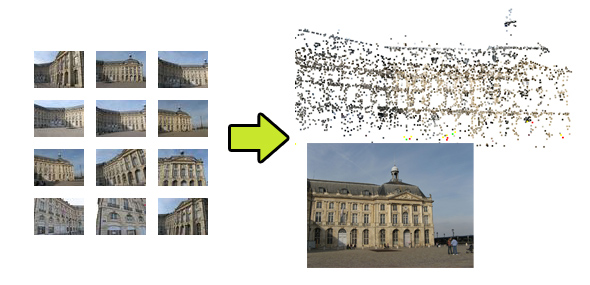

My outdoor 3D tracking algorithm for augmented reality needs an accurate point cloud. This is why I’m interested in structure from motion and have created two SfM toolkits:

- SFMToolkit (SiftGPU -> Bundler -> CMVS -> PMVS2)

- PhotoSynthToolkit (PhotoSynth -> PMVS2)

Posts published in 2010:

- 2010/12/22: Outdoor tracking using panoramic image

- 2010/12/20: Structure from motion projects

- 2010/12/13: Augmented Reality outdoor tracking becoming reality

- 2010/11/20: Kinect experiment with Ogre3D

- 2010/11/19: PhotoSynthToolkit results

- 2010/11/09: PhotoSynth Toolkit updated

- 2010/11/05: Structure From Motion Toolkit released

- 2010/09/27: My 5 years old Quiksee competitor

- 2010/09/23: PMVS2 x64 and videos tutorials

- 2010/09/08: Introducing OpenSynther

- 2010/08/22: Dense point cloud created with PhotoSynth and PMVS2

- 2010/08/19: My PhotoSynth ToolKit

- 2010/07/12: Pose Estimation using SfM point cloud

- 2010/07/11: Remote Augmented Reality Prototype

- 2010/07/08: Structure From Motion Experiment

- 2010/06/25: GPU-Surf video demo

- 2010/06/23: GPUSurf and Ogre::GPGPU

- 2010/06/20: Ogre::Canvas, a 2D API for Ogre3D

- 2010/05/09: Ogre::OpenCL and Ogre::Canvas

- 2010/04/26: Cuda integration with Ogre3D

- 2010/04/09: Multitouch prototype done using Awesomium and Ogre3D

- 2010/03/05: ArToolKitPlus integration with Ogre3D

- 2010/02/20: Hello World !