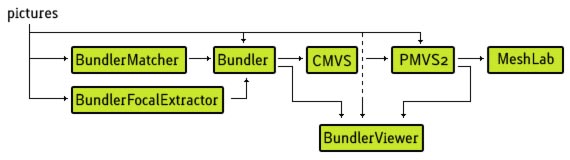

This viewer is now integrated with the new version of my PhotoSynthToolkit (v5). The toolkit allows you to download a synth's point cloud and thumbnail pictures. You can also densify the sparse point cloud generated by PhotoSynth using PMVS2 and then create an accurate mesh using MeshLab.

New features in PhotoSynthToolkit v5:

- Thumbnail downloading should be faster (about 8x)

- New C++ HD picture downloader (downloads the tiles and recomposes them)

- Tool to generate “vis.dat” from a previous PMVS2 run (by analysing the .patch file)

- Working Ogre3D PhotoSynth viewer:

  - Can read the dense point cloud created with my PhotoSynthToolkit using PMVS2

  - Click on a picture to change the camera viewpoint

  - No-roll camera system

Warning: the PhotoSynth viewer may need a very powerful GPU, depending on the synth's complexity (point cloud size and number of thumbnails). So far I’ve tested a scene with 820 pictures and 900k vertices on an Nvidia 8800 GTX with 768 MB of memory, where it ran at 25 fps (75 fps on a GTX 470 with 1280 MB). I wish I could have used Microsoft Seadragon.


Download:

The PhotoSynthToolkit v5 is available on its dedicated page. Please do not link directly to the zip file; link to this page instead, so that people who want to download the toolkit always get the latest version.

Video demo:

Future version

Josh Harle has created CameraExport: a solution for 3DS Max that makes it possible to render the pictures of a synth using camera projection. I haven’t tested it yet, but I’ll try to generate a file compatible with his 3DS Max script directly from my toolkit, which would avoid having to download the synth again with a modified version of SynthExport. Josh has also created a very interesting tutorial on how to use masks with PMVS2:

Masks with the PhotoSynth Toolkit 4 – tutorial from Josh Harle on Vimeo.