In my previous post I introduced my PhotoSynth ToolKit. The source code is available on my Google Code page under the MIT license, and you can download it right now: PhotoSynthToolKit2.zip. I have made a video to show you what I've managed to do with it:

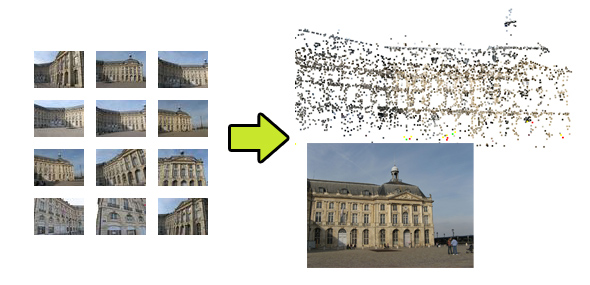

As you can see in the video, I have managed to run PMVS2 on PhotoSynth output.

All the synths used in this video are available on my PhotoSynth account, or directly here:

- Porte Cailhau: http://photosynth.net/view.aspx?cid=1e509490-5657-453d-a2f6-2e55d14ae512

- Goutz: http://photosynth.net/view.aspx?cid=1471c7c7-da12-4859-9289-a2e6d2129319

- Place de la Bourse: http://photosynth.net/view.aspx?cid=e82eca65-60fe-498b-8916-80d1e3245640

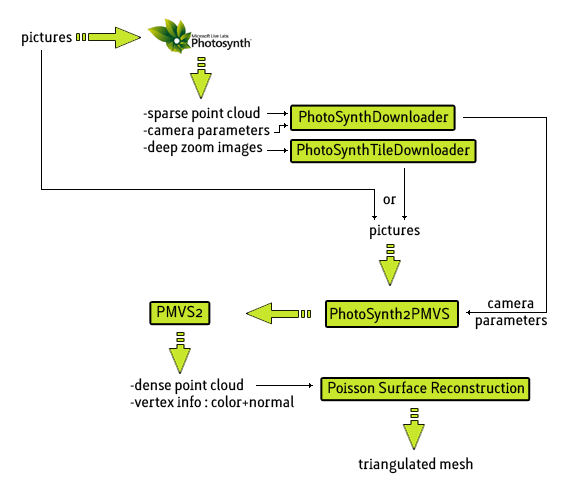

Workflow

My PhotoSynth ToolKit is composed of 3 programs:

- PhotoSynthDownloader: downloads 0.json, the .bin files and the thumbnails

- PhotoSynth2PMVS: undistorts the pictures and writes the CONTOUR files needed by PMVS2

- PhotoSynthTileDownloader [optional]: downloads all the pictures of a synth in HD (not released yet for legal reasons, but you can watch a preview video)
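For readers wondering what the CONTOUR files contain: PMVS2 expects one text file per picture that starts with the word CONTOUR, followed by a 3×4 projection matrix P = K [R | t]. The C++ sketch below is a minimal illustration assuming the camera has already been converted to a focal length in pixels, a principal point, a rotation matrix R and a translation t. The conversion from PhotoSynth's own camera parameters is not shown, and since Bundler-style cameras look down the -Z axis, a sign flip may be needed there. The helper names (intrinsics, projectionMatrix, contourText, writeContourFile) are hypothetical, not taken from the toolkit.

```cpp
#include <array>
#include <cassert>
#include <fstream>
#include <iomanip>
#include <sstream>
#include <string>

// Row-major matrices (hypothetical helper types, not the toolkit's own).
using Mat3 = std::array<std::array<double, 3>, 3>;
using Mat34 = std::array<std::array<double, 4>, 3>;
using Vec3 = std::array<double, 3>;

// Pinhole intrinsics: focal length f in pixels, principal point (cx, cy).
Mat3 intrinsics(double f, double cx, double cy) {
    assert(f > 0.0);
    return {{{f, 0.0, cx}, {0.0, f, cy}, {0.0, 0.0, 1.0}}};
}

// P = K * [R | t]; the sign convention of R and t is assumed to be already fixed.
Mat34 projectionMatrix(const Mat3& K, const Mat3& R, const Vec3& t) {
    Mat34 P{};  // zero-initialised
    for (int i = 0; i < 3; ++i) {
        for (int k = 0; k < 3; ++k) {
            for (int j = 0; j < 3; ++j) P[i][j] += K[i][k] * R[k][j];
            P[i][3] += K[i][k] * t[k];
        }
    }
    return P;
}

// PMVS2 camera-file layout: the line "CONTOUR", then 3 rows of 4 numbers.
std::string contourText(const Mat34& P) {
    std::ostringstream out;
    out << "CONTOUR\n" << std::setprecision(12);
    for (const auto& row : P) {
        out << row[0] << ' ' << row[1] << ' ' << row[2] << ' ' << row[3] << '\n';
    }
    return out.str();
}

// Writes one camera file, e.g. txt/00000000.txt; returns false on I/O failure.
bool writeContourFile(const std::string& path, const Mat34& P) {
    std::ofstream file(path);
    if (!file) return false;
    file << contourText(P);
    return static_cast<bool>(file);
}
```

Each file would go into the txt/ folder of the PMVS2 input directory, named after the picture index (00000000.txt, 00000001.txt, ...), next to the undistorted pictures in visualize/.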

Limitations

It seems that my first version doesn't handle the JSON parsing of every kind of synth very well; I'll try to post a new version asap. Update: fixed in PhotoSynthToolKit2.zip.

PMVS2 for Windows is a 32-bit application, so it is limited to 2 GB of memory (3 GB if you start Windows with the /3GB option and build the application with the /LARGEADDRESSAWARE linker flag, I believe). I haven't tried the 64-bit Linux version yet, but I have managed to compile a 64-bit version of PMVS2. My 64-bit build manages to use more than 4 GB of memory while loading the pictures, but it crashes right after all the pictures have been loaded. I haven't investigated much; it may well be my own fault, as compiling the dependencies (gsl, pthread, jpeg) wasn't an easy task.
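To get a feeling for the 2 GB limit, here is a back-of-the-envelope C++ sketch that only counts the decoded 8-bit RGB buffers of the input pictures. It ignores the image pyramid and everything else PMVS2 allocates, so the real footprint is higher. The Picture struct, the function names and the 300-picture example are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical description of one input picture, in pixels.
struct Picture {
    std::uint64_t width;
    std::uint64_t height;
};

// Bytes needed to hold every picture decoded as 8-bit RGB (3 bytes per pixel).
// Only the raw buffers are counted: no image pyramid, patches or allocator overhead.
std::uint64_t rawRgbBytes(const std::vector<Picture>& pictures) {
    std::uint64_t total = 0;
    for (const Picture& p : pictures) {
        assert(p.width > 0 && p.height > 0);
        total += p.width * p.height * 3;
    }
    return total;
}

// Default user address space of a 32-bit process on Windows: 2 GiB.
constexpr std::uint64_t kDefault32BitLimit = 2ull << 30;

// True when the raw buffers alone stay below the given address-space limit.
bool fitsInAddressSpace(const std::vector<Picture>& pictures,
                        std::uint64_t limitBytes = kDefault32BitLimit) {
    return rawRgbBytes(pictures) < limitBytes;
}
```

With 300 pictures of 2048×1536, the raw buffers alone take 2,831,155,200 bytes (about 2.6 GiB): over the default 2 GiB user address space but under 3 GiB, which illustrates why the /3GB option can matter for a synth of that size.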

Anyway, PMVS2 should ideally be used with CMVS, but I'm not sure I can extract enough information from PhotoSynth. CMVS works from Bundler output, which is more verbose: it gives you the 2D/3D correspondences and the number of matches per image. I think I can create a vis.dat file from some of the information stored in the JSON file, but that would only speed up the process, so it doesn't help much with the 2 GB limit.
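The vis.dat file is a simple text file: the keyword VISDATA, the number of images, then one line per image giving its index, the number of images it should be matched against, and their indices. Below is a C++17 sketch that builds it, assuming we already know, for every 3D point, which images observe it. Where that list would come from in 0.json or the .bin files is an assumption not covered here, and the minShared threshold is a hypothetical tuning knob. PMVS2 only reads the file when its option file sets useVisData to 1.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <map>
#include <set>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// observations[p] lists the images that see 3D point p (hypothetical input).
using Observations = std::vector<std::vector<int>>;

// Builds the text of a PMVS2 vis.dat file. Two images are neighbours when
// they share at least minShared points.
std::string makeVisData(const Observations& observations, int imageCount, int minShared) {
    assert(imageCount >= 0 && minShared >= 1);

    // Count the points shared by each unordered image pair (i < j).
    std::map<std::pair<int, int>, int> shared;
    for (const auto& images : observations) {
        // A std::set removes duplicates and keeps the indices sorted.
        const std::set<int> seen(images.begin(), images.end());
        assert(seen.empty() || (*seen.begin() >= 0 && *seen.rbegin() < imageCount));
        for (auto a = seen.begin(); a != seen.end(); ++a)
            for (auto b = std::next(a); b != seen.end(); ++b)
                ++shared[{*a, *b}];
    }

    // Keep the pairs that reach the threshold, in both directions.
    std::vector<std::set<int>> neighbours(static_cast<std::size_t>(imageCount));
    for (const auto& [pair, count] : shared) {
        if (count < minShared) continue;
        neighbours[pair.first].insert(pair.second);
        neighbours[pair.second].insert(pair.first);
    }

    // VISDATA, image count, then "index count n1 n2 ..." per image.
    std::ostringstream out;
    out << "VISDATA\n" << imageCount << '\n';
    for (int i = 0; i < imageCount; ++i) {
        out << i << ' ' << neighbours[i].size();
        for (int n : neighbours[i]) out << ' ' << n;
        out << '\n';
    }
    return out.str();
}
```

For instance, four points seen by images {0,1,2}, {0,1}, {1,2} and {0,1} with minShared = 2 make image 1 a neighbour of images 0 and 2, while images 0 and 2 (only one shared point) are not linked.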

Credits

My PhotoSynth ToolKit is written in C++ and the source code is available on my Google Code page (MIT license). It uses:

- Boost.Asio: network requests for SOAP + file downloads

- TinyXml: parsing of SOAP messages

- JSON Spirit: parsing of the PhotoSynth file “0.json”

- jpeg: reading/writing JPEG files for radial undistortion

Furthermore, parts of the code are based on:

- Bundler: RadialUndistort + Bundler2PMVS

- SynthExport: C# binary loader
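The radial undistortion step can be sketched as follows, in the spirit of Bundler's RadialUndistort but not copied from it. The sketch assumes a Bundler-style model, where an undistorted point p in normalized coordinates (pixels divided by the focal length f) is scaled by 1 + k1·|p|² + k2·|p|⁴, with the principal point at the image centre; whether PhotoSynth's distortion coefficients follow exactly this convention is an assumption. Every output pixel is mapped through the distortion to find its source position, and the input is sampled bilinearly. It works on an already decoded single-channel buffer, so JPEG decoding/encoding with the jpeg library is left out, and the Image type and function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Decoded single-channel 8-bit image, row-major (hypothetical type).
struct Image {
    int width = 0;
    int height = 0;
    std::vector<unsigned char> pixels;
};

// Bilinear sample at (x, y); points more than half a pixel outside are black.
double sampleBilinear(const Image& img, double x, double y) {
    if (x < -0.5 || y < -0.5 || x > img.width - 0.5 || y > img.height - 0.5) return 0.0;
    x = std::clamp(x, 0.0, img.width - 1.0);
    y = std::clamp(y, 0.0, img.height - 1.0);
    const int x0 = static_cast<int>(x), y0 = static_cast<int>(y);
    const int x1 = std::min(x0 + 1, img.width - 1), y1 = std::min(y0 + 1, img.height - 1);
    const double fx = x - x0, fy = y - y0;
    auto at = [&img](int xx, int yy) {
        return static_cast<double>(img.pixels[static_cast<std::size_t>(yy) * img.width + xx]);
    };
    const double top = at(x0, y0) * (1.0 - fx) + at(x1, y0) * fx;
    const double bottom = at(x0, y1) * (1.0 - fx) + at(x1, y1) * fx;
    return top * (1.0 - fy) + bottom * fy;
}

// Assumed Bundler-style model: a normalized undistorted point p lands at
// centre + f * (1 + k1*|p|^2 + k2*|p|^4) * p in the distorted picture.
Image undistort(const Image& in, double f, double k1, double k2) {
    assert(f > 0.0);
    assert(in.pixels.size() == static_cast<std::size_t>(in.width) * in.height);
    Image out{in.width, in.height, std::vector<unsigned char>(in.pixels.size())};
    const double cx = 0.5 * (in.width - 1), cy = 0.5 * (in.height - 1);
    for (int y = 0; y < in.height; ++y) {
        for (int x = 0; x < in.width; ++x) {
            // Inverse mapping: find where this output pixel lies in the input.
            const double px = (x - cx) / f, py = (y - cy) / f;
            const double r2 = px * px + py * py;
            const double scale = 1.0 + k1 * r2 + k2 * r2 * r2;
            const double v = sampleBilinear(in, cx + f * scale * px, cy + f * scale * py);
            out.pixels[static_cast<std::size_t>(y) * in.width + x] =
                static_cast<unsigned char>(std::lround(v));
        }
    }
    return out;
}
```

The output keeps the input size, so pixels whose source falls outside the picture come out black; an RGB picture would simply run the same loop once per channel.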

Please go to the PhotoSynthToolkit page to get the latest version.