Bad idea: hacking during the New Year holiday and during the Super Bowl (go Seahawks!). The result? A very hacky Google Chrome extension that adds several alpha/beta-quality features to the photosynth.net website. This is my gift for 2014, enjoy!

You can get the extension here. Make sure you are logged in on photosynth.net (and have joined the beta) while using the extension, as some photosynth2 features are still only available to beta users (hopefully this limitation will be removed soon).

Features added by the extension:

The extension changes the top menu to:


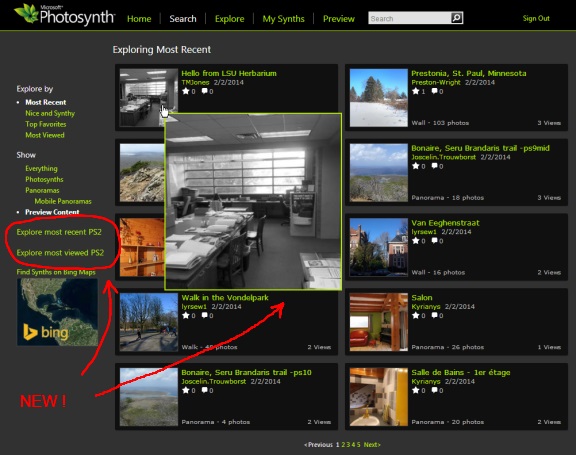

- Search: the previous Explore page, plus new links to a fullscreen, grid-based PS2 explore page.

- Explore: a new fullscreen, map-based explore page.

- My Synths: the previous My Photosynths page, plus new tabs (My map, My PS2, My info).

- Preview: a link to the new preview website, plus an animated preview on the Create page.

Search:

An animated preview plays when you hover over a PS2 thumbnail, and there are new links to the new explore page.

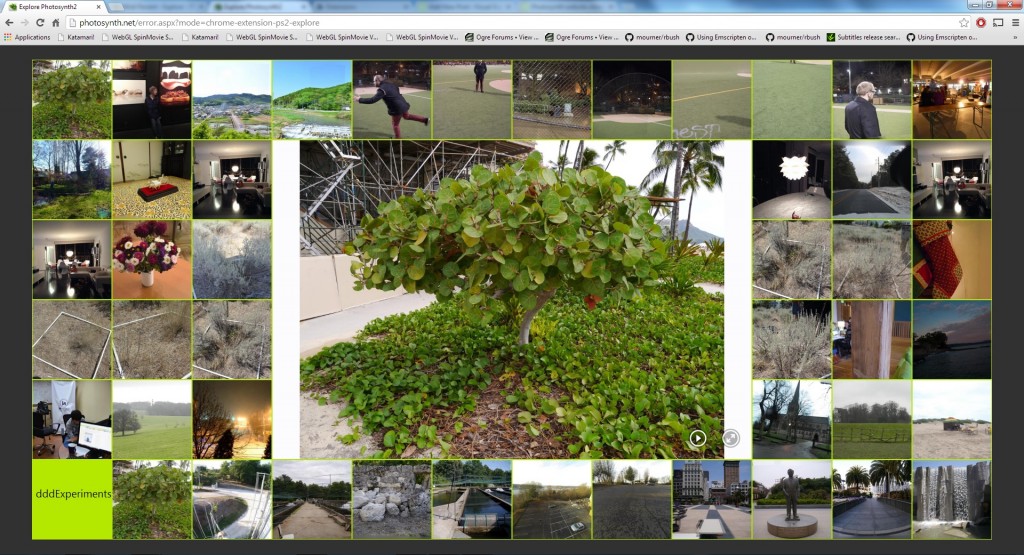

Clicking on “Explore most recent PS2” or “Explore most viewed PS2” will display the new fullscreen, grid-based PS2 explore page.

The bottom row contains the latest synths of the current user (the owner of the synth currently being played). Hover over a synth to see a preview and click it to load it. Experimental feature: this page uses a new version of the viewer capable of quickly unloading and loading synths (don’t click too fast).

Explore:

A new fullscreen page showing synths (PS1, panorama and PS2) on a map. Sadly, there is no proper ranking here, so you need to zoom in a lot before you can see any synths. Also, a known bug in Google Chrome prevents Silverlight content from displaying when it is opened in a new tab.

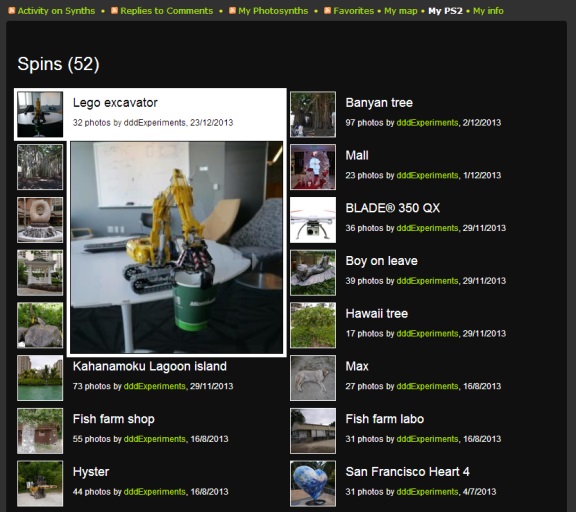

My Synths:

The extension adds three new tabs: My map, My PS2 and My info.


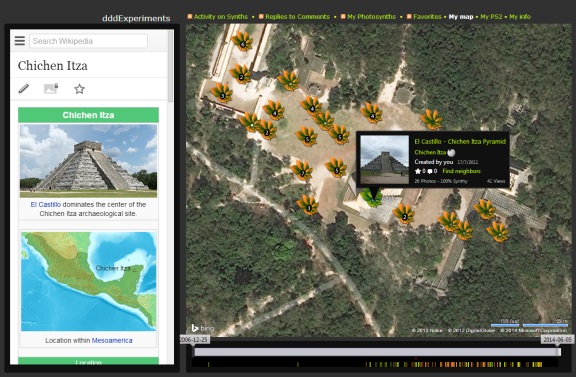

My map

This page is quite complicated and adds a lot of features… I will probably write another post explaining how to use it.

Basically, you can map (i.e. geotag) your own synths (PS1, panorama, PS2). You need to be logged in; click on ‘show untagged synths’, then search for a place on the map, move the orange pushpin, or right-click to reassign its location. Once the orange pushpin is at the place where you captured your synth, click on ‘assign pushpin location’ to assign that location to the corresponding synth.

To select synths, press and hold ‘ctrl’ and draw a rectangle; from that selection you can either remove their map positions or assign them a text tag. Clicking on ‘movable pushpins’ lets you move your synth pushpins directly on the map. Click on ‘Find neighbors’ to find synths around your synth (orange = community synths, green = yours). You can also use the timeline to display only the synths captured in a given time interval.

Finally, you can edit the URL and switch w=0 to w=1 to enable the Wikipedia option, which searches for the closest Wikipedia article to your synth. Please consider donating to WikiLocation if you use the w=1 option.
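If you would rather not edit the address bar by hand, the w=0 to w=1 switch is just a query-parameter change. Here is a minimal TypeScript sketch using the standard `URL` API. Only the parameter name `w` comes from this post; the helper name `enableWikipediaOption` and the example address are hypothetical, since the actual My map URL isn't shown here.

```typescript
// Hypothetical helper: sets the `w` query parameter to "1" (Wikipedia option on).
// Only the parameter name `w` comes from the post; the rest is illustrative.
function enableWikipediaOption(pageUrl: string): string {
  const url = new URL(pageUrl);
  // set() replaces an existing `w` value in place, or appends it if absent.
  url.searchParams.set("w", "1");
  return url.toString();
}

// Hypothetical example address; the real My map URL is not given in the post.
const before = "https://example.com/mymap?user=alice&w=0";
console.log(enableWikipediaOption(before));
```

Because `searchParams.set` also appends the parameter when it is missing, the same helper works whether or not the URL already contains `w=0`.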

My PS2

A new page that lets you quickly preview all your PS2 synths. They are grouped by topology (spin, panorama, wall, walk) and then sorted by captured date.
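To picture how that grouping works, here is a small TypeScript sketch. The four topology names come from this post; the `Synth` record shape, the `groupByTopology` helper and the oldest-first sort order are assumptions for illustration, not the extension's actual code.

```typescript
// The four PS2 topologies listed in the post.
type Topology = "spin" | "panorama" | "wall" | "walk";

// Hypothetical shape of a synth record; field names are assumptions.
interface Synth {
  id: string;
  topology: Topology;
  capturedDate: Date;
}

// Display order of the groups, following the order used in the post.
const TOPOLOGY_ORDER: Topology[] = ["spin", "panorama", "wall", "walk"];

// Groups synths by topology, then sorts each group by captured date
// (oldest first; the sort direction is an assumption).
function groupByTopology(synths: Synth[]): Map<Topology, Synth[]> {
  const groups = new Map<Topology, Synth[]>();
  for (const t of TOPOLOGY_ORDER) groups.set(t, []);
  for (const s of synths) groups.get(s.topology)!.push(s);
  for (const list of groups.values()) {
    list.sort((a, b) => a.capturedDate.getTime() - b.capturedDate.getTime());
  }
  return groups;
}

// Usage with made-up sample data.
const grouped = groupByTopology([
  { id: "a", topology: "walk", capturedDate: new Date("2013-12-30") },
  { id: "b", topology: "spin", capturedDate: new Date("2013-06-01") },
  { id: "c", topology: "walk", capturedDate: new Date("2013-01-15") },
]);
for (const [topology, list] of grouped) {
  console.log(topology, list.map((s) => s.id).join(","));
}
```

Pre-seeding the map in `TOPOLOGY_ORDER` keeps the groups in a fixed order on the page, even when a topology has no synths yet.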

My info

Sadly, the captured date is not properly filled in by the system (it uses the upload date instead). Click on ‘fix capturedDate’ to set the captured date of all your PS2 synths (this might take a while: wait for the ‘done’ alert box).

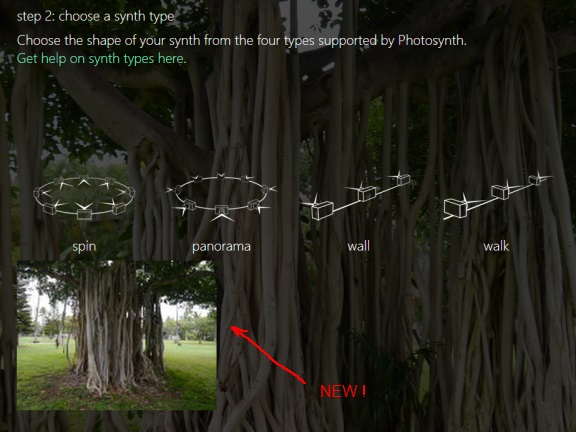

Preview – Create

The extension adds an animated preview to help you choose the proper topology.

Comments

I created this extension on my own, so it is not necessarily representative of upcoming Photosynth features.

FYI, this is not my first extension for the Photosynth website: I previously created one that adds a WebGL fallback viewer for Photosynth 1 content if you don’t have Silverlight.

Have fun exploring all the features introduced in this Chrome extension!