I’ve recently discovered the new transferable objects feature in Google Chrome. Transferable objects are really great! You should definitely use them, because I’ve found that structured cloning leaks memory (you can try this leaking demo with Chrome 17). So I’ve started updating my extension and benchmarked various solutions with a big synth of 20 MB (1,200k vertices and 1,000 pictures).

I’ve also greatly improved my binary parsing by relying only on native binary parsing (DataView). One thing about DataView: did they realize that if you forget the endianness parameter, it defaults to big-endian? (little-endian = x86, big-endian = Motorola 68k)
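A minimal sketch of the endianness pitfall, assuming the file stores little-endian values (as data written on x86 usually does). `readFloat32LE` is a hypothetical helper; `getFloat32`, `getUint32` and `setUint32` are the standard DataView methods, whose optional last argument selects little-endian when `true`.

```javascript
// Hypothetical helper: reads `count` little-endian float32 values.
// The `true` flag is what selects little-endian; omitting it reads big-endian.
function readFloat32LE(view, byteOffset, count) {
  const out = new Float32Array(count);
  for (let i = 0; i < count; i++) {
    out[i] = view.getFloat32(byteOffset + 4 * i, true);
  }
  return out;
}

const buffer = new ArrayBuffer(8);
const view = new DataView(buffer);

// Bytes stored as 04 03 02 01 (little-endian write).
view.setUint32(0, 0x01020304, true);
const asLittleEndian = view.getUint32(0, true); // 0x01020304
const asBigEndian = view.getUint32(0);          // flag forgotten: 0x04030201

view.setFloat32(4, 1.5, true);
const floats = readFloat32LE(view, 4, 1);       // Float32Array [1.5]
```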

Thanks to both optimizations, the new version is 4x faster! (loading time: 24 s to 6 s)

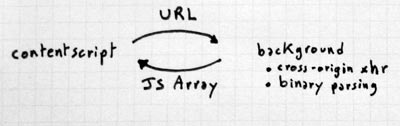

Solution 1

- Cross-origin XHR was forbidden in content scripts (fixed since Chrome 13)

- Lack of TypedArray copy/transfer, so TypedArrays had to be replaced by plain JS arrays

- Loading time: 13600ms (24400ms with the previous version without optimized binary parsing)

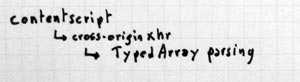

Solution 2

- Issue: the UI is frozen during parsing (lack of threading)

- Loading time: 6500ms

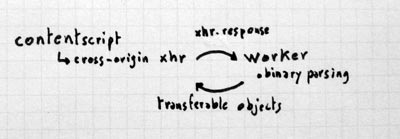

Solution 3

- I’m using transferable objects to both send the xhr.response (ArrayBuffer) and receive the parsed Float32Array (vertex positions) and Uint8Array (vertex colors).

- Issue: I couldn’t spawn a worker directly from my extension (SECURITY_ERR: DOM Exception 18), so I had to inline the worker as a workaround.

- Loading time: 6000ms
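A minimal sketch of the two techniques in this solution. `spawnInlineWorker` is a hypothetical helper that builds a worker from a function's source through a Blob URL. It uses the Blob constructor of current browsers (older Chrome builds used a BlobBuilder instead) and is only defined here, not called. The transfer part uses a MessageChannel as a stand-in for the worker, an assumption made so the sketch runs on its own; `worker.postMessage(message, transferList)` takes the same two arguments. Buffer names and sizes are illustrative.

```javascript
// Hypothetical helper (browser only): inline worker via a Blob URL.
// workerMain must be self-contained, since only its source text is used.
function spawnInlineWorker(workerMain) {
  const source = "(" + workerMain.toString() + ")();";
  const blob = new Blob([source], { type: "text/javascript" });
  return new Worker(URL.createObjectURL(blob));
}

// Stand-in for the page <-> worker link (assumption, see lead-in).
const { port1, port2 } = new MessageChannel();

// Stands in for xhr.response with xhr.responseType = "arraybuffer".
const response = new ArrayBuffer(1024);
// The second argument is the transfer list: ownership moves, nothing is cloned.
port1.postMessage({ cmd: "parse", data: response }, [response]);
const responseBytesLeft = response.byteLength; // 0: the buffer is now detached

// Parsed results going back: transfer each typed array's underlying buffer.
const vertexCount = 4;
const positions = new Float32Array(3 * vertexCount); // x, y, z per vertex
const colors = new Uint8Array(3 * vertexCount);      // r, g, b per vertex
port2.postMessage({ positions, colors }, [positions.buffer, colors.buffer]);
const positionsLeft = positions.length; // 0: view over a detached buffer

// Close both ends so the sketch leaves nothing running.
port1.close();
port2.close();
```

After a transfer, the sender must not touch the old views again; the receiving side gets fully usable `Float32Array`/`Uint8Array` objects without any copy.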

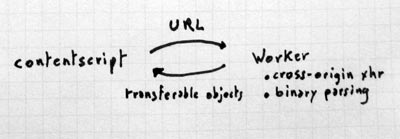

Solution 4

- Issue: because of this bug, workers cannot perform cross-origin XHR.

- Loading time: N/A

I’ve also optimized PLY file generation by producing a binary file on the client side instead of an ASCII one.

- ASCII: 74 MB in 9000 ms

- Binary: 17 MB in 530 ms (~17x faster!)
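A minimal sketch of client-side binary PLY generation, assuming one float x/y/z and one uchar red/green/blue per vertex (the extension's actual property layout isn't given here). `writeBinaryPly` is a hypothetical name. Every value is written once into a single preallocated buffer through a DataView, with the little-endian flag set to match the `binary_little_endian` header, so no per-value string formatting happens.

```javascript
// Hypothetical writer: positions = [x0, y0, z0, x1, ...], colors = [r0, g0, b0, ...].
function writeBinaryPly(positions, colors) {
  const vertexCount = positions.length / 3;
  const header =
    "ply\n" +
    "format binary_little_endian 1.0\n" +
    "element vertex " + vertexCount + "\n" +
    "property float x\n" +
    "property float y\n" +
    "property float z\n" +
    "property uchar red\n" +
    "property uchar green\n" +
    "property uchar blue\n" +
    "end_header\n";
  const headerBytes = new TextEncoder().encode(header); // plain ASCII header
  const stride = 3 * 4 + 3; // 3 float32 + 3 uint8 = 15 bytes per vertex
  const out = new Uint8Array(headerBytes.length + stride * vertexCount);
  out.set(headerBytes, 0);

  const view = new DataView(out.buffer);
  let offset = headerBytes.length;
  for (let i = 0; i < vertexCount; i++) {
    view.setFloat32(offset, positions[3 * i], true);
    view.setFloat32(offset + 4, positions[3 * i + 1], true);
    view.setFloat32(offset + 8, positions[3 * i + 2], true);
    view.setUint8(offset + 12, colors[3 * i]);
    view.setUint8(offset + 13, colors[3 * i + 1]);
    view.setUint8(offset + 14, colors[3 * i + 2]);
    offset += stride;
  }
  return out;
}
```

In a browser, the returned bytes can be wrapped with `new Blob([ply], { type: "application/octet-stream" })` for download.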

Sadly, I haven’t yet found a way to speed up generation of the ASCII “bundle.out” file (it involves a lot of string concatenation and number formatting).

I haven’t updated the extension on the Chrome Web Store yet because I’m working on switching to the new BufferGeometry of Three.js, which should be more efficient. Building a BufferGeometry directly from the Float32Array (vertex positions) and the Uint8Array (vertex colors) seems a much better solution IMO.

Mesh compression

I’ve also recently discovered the webgl-loader mesh compression solution. I compiled my own version of objcompress.cc and fixed the JSON export. Then I created my own format that packs all the UTF-8 files into a single file (reducing the number of HTTP requests needed). I will post about it soon: stay tuned!
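The packing format itself isn’t described here, so the following is only a hypothetical container illustrating the general idea: a little-endian uint32 giving the length of a JSON index, the index (name, offset, length per file), then all file bodies back to back. `packFiles` and `unpackFiles` are illustrative names. Bodies stay raw bytes so the UTF-8 payloads are never re-encoded, and a single request fetches the whole pack.

```javascript
// Hypothetical container: [uint32 LE index length][JSON index][bodies...]
// files: { name: Uint8Array }
function packFiles(files) {
  const index = [];
  const bodies = [];
  let dataLength = 0;
  for (const name of Object.keys(files)) {
    const bytes = files[name];
    index.push({ name, offset: dataLength, length: bytes.length });
    bodies.push(bytes);
    dataLength += bytes.length;
  }
  const indexBytes = new TextEncoder().encode(JSON.stringify(index));
  const out = new Uint8Array(4 + indexBytes.length + dataLength);
  new DataView(out.buffer).setUint32(0, indexBytes.length, true);
  out.set(indexBytes, 4);
  let pos = 4 + indexBytes.length;
  for (const bytes of bodies) {
    out.set(bytes, pos);
    pos += bytes.length;
  }
  return out;
}

// Returns { name: Uint8Array }, each a view into `packed` (no copy).
function unpackFiles(packed) {
  const view = new DataView(packed.buffer, packed.byteOffset, packed.byteLength);
  const indexLength = view.getUint32(0, true);
  const index = JSON.parse(new TextDecoder().decode(packed.subarray(4, 4 + indexLength)));
  const dataStart = 4 + indexLength;
  const files = {};
  for (const { name, offset, length } of index) {
    files[name] = packed.subarray(dataStart + offset, dataStart + offset + length);
  }
  return files;
}
```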

Firefox extension?

On a side note, I’ve also been playing with the new Mozilla Add-on Builder SDK… well, IMO Firefox isn’t the best browser for extensions anymore. I’ve been very disappointed by the restrictions applied to code running in content scripts (for example, prototype.js doesn’t work: I had to use a clone I built a long time ago). I’m also very concerned about their security strategy: there is no cross-origin XHR permissions field in the manifest, so extensions can make cross-origin requests to any domain by default! Nevertheless, I’ve managed to port the binary parsing of the point cloud, but Three.js isn’t working either.


> Sadly I didn’t manage to find a way to accelerate “bundle.out” ascii file generation yet (involving lot of string concatenation and numbers formatting).

Have you tried postponing the concatenation? Just push the data into an array first (these can be pre-formatted substrings, e.g. an array element for each camera, or for each line). When done, just use the join function of the array.

Example:

```javascript
var a = ["this", "is", "an", "example"];
var s = a.join(" "); // or a.join("\n") for newlines
```

This should be a lot faster, because it only needs one concatenation (creating the joined string) instead of creating a new string every time, copying the old string data plus the newly added substring…

@Bart: thanks for the tip, but this is actually an old optimization that is no longer valid (JS engines now optimize string concatenation: see this jsperf, for example). I’ve described the solution I ended up with in my next post. The real issue was memory usage and the large number of temporary objects being created and garbage-collected. So writing directly into a binary array was the only way to keep memory usage low (AFAIK).
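The actual solution is in the follow-up post and isn’t reproduced here. As a hypothetical illustration of writing ASCII output directly into a binary array, the sketch below formats integers and fixed-point numbers as digit bytes straight into a growable Uint8Array, so no temporary string is created per value. `AsciiWriter` and its methods are illustrative names. The fixed-point path goes through an integer, so it assumes |x|·10^decimals stays below 2^53.

```javascript
// Hypothetical writer: ASCII digits go straight into a byte buffer.
class AsciiWriter {
  constructor(capacity) {
    this.bytes = new Uint8Array(capacity);
    this.length = 0;
  }
  // Grow (doubling) so that `extra` more bytes fit.
  ensure(extra) {
    if (this.length + extra <= this.bytes.length) return;
    const grown = new Uint8Array(Math.max(this.bytes.length * 2, this.length + extra));
    grown.set(this.bytes.subarray(0, this.length));
    this.bytes = grown;
  }
  char(code) {
    this.ensure(1);
    this.bytes[this.length++] = code;
  }
  // Non-negative integer, written right to left into its reserved slot.
  uint(n) {
    let digits = 1;
    for (let t = n; t >= 10; t = Math.floor(t / 10)) digits++;
    this.ensure(digits);
    for (let i = digits - 1; i >= 0; i--) {
      this.bytes[this.length + i] = 48 + (n % 10); // 48 = "0"
      n = Math.floor(n / 10);
    }
    this.length += digits;
  }
  // Fixed-point number with `decimals` digits after the point.
  fixed(x, decimals) {
    const scale = 10 ** decimals;
    const scaled = Math.round(Math.abs(x) * scale);
    if (x < 0 && scaled !== 0) this.char(45); // "-"
    this.uint(Math.floor(scaled / scale));
    if (decimals === 0) return;
    this.char(46); // "."
    const frac = scaled % scale;
    // Left-pad the fractional part with zeros.
    for (let d = scale / 10; d > frac && d > 1; d /= 10) this.char(48);
    this.uint(frac);
  }
  // View of the bytes written so far.
  result() {
    return this.bytes.subarray(0, this.length);
  }
}
```

Usage: `w.fixed(1.5, 3); w.char(32); w.uint(1200); w.char(10);` appends `1.500 1200` and a newline; in a browser, `w.result()` can be passed straight to `new Blob([...])`.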