This is just a small post to show you what kind of results you can get with my PhotoSynthToolkit:

Download location and source code introduced in my previous post.

I have updated my PhotoSynth toolkit for easier usage (the same way as SFMToolkit). This is an example of dense mesh creation from 12 pictures using this toolkit:

The 12 pictures were shot with a Canon PowerShot A700:

Thanks to this toolkit, PMVS2 and MeshLab you can create a dense mesh from these 12 pictures:

triangulated mesh with vertex color -> triangulated mesh with vertex color and SSAO -> triangulated mesh shaded with SSAO -> triangulated mesh wireframe -> photosynth sparse point cloud

(sparse point cloud: 8,600 vertices; dense point cloud: 417k vertices; mesh: 917k triangles)

You can also take a look at the PhotoSynth reconstruction of the sculpture.

PhotoSynthToolkit is composed of several programs:

The source code is available under the MIT license on my GitHub. I have also released a win32 binary version with Windows scripting (WSH) for easier usage: PhotoSynthToolkit4.zip.

If you need some help or just want to discuss photogrammetry, please join the photogrammetry forum created by Olafur Haraldsson. You may also be interested in Josh Harle’s video tutorials: they are partially outdated because of the new PhotoSynthToolkit version, but they are still a very good way to learn how to use MeshLab.

Please go to the PhotoSynthToolkit page to get the latest version

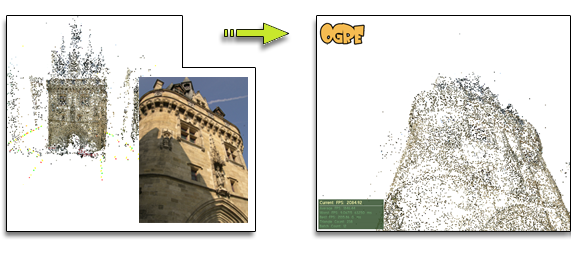

I have finally released my Structure-From-Motion Toolkit (SFMToolkit). So what can you do with it? Let’s say you have a nice place like the one just below:

Place de la Bourse, Bordeaux, FRANCE (picture from Bing)

Well, now you can take a lot of pictures of the place (around 50 in my case):

And then compute structure from motion and get a sparse point cloud using Bundler:

Finally, you get a dense point cloud, divided into clusters by CMVS and computed by PMVS2:

You can also take a look at the PhotoSynth reconstructions of the place, one with 53 pictures and one with 26 pictures (without the fountain).

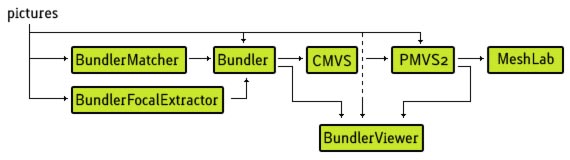

This is the SFMToolkit workflow:

SFMToolkit is composed of several programs:

As you can see, this “toolkit” is composed of several open-source components. This is why I have decided to open-source my part of the job too. You can download the source code from the SFMToolkit GitHub. You can also download a pre-compiled x64 version of the toolkit with Windows scripting (WSH) for easier usage (but not cross-platform): SFMToolkit1.zip.

If you need some help or just want to discuss photogrammetry, please join the photogrammetry forum created by Olafur Haraldsson. You may also be interested in Josh Harle’s video tutorials: they are partially outdated because of the new SFMToolkit, but they are still a very good way to learn how to use MeshLab.

I have released a toolkit for PhotoSynth that lets you create a dense point cloud using PMVS2.

You can download PhotoSynthToolKit1.zip and take a look at the code on my Google Code page.

| PhotoSynth sparse point cloud: 11k vertices | PMVS2 dense point cloud: 230k vertices |

I have also created a web app, PhotoSynthTileDownloader, that lets you download all the pictures of a synth in HD. I haven’t released it yet because I’m concerned about the legal issues, but you can see for yourself that it’s already working:

I’ll give more information about it in a few days, stay tuned!

Edit: I have removed the workflow graph and moved it to my next post.

Please go to the PhotoSynthToolkit page to get the latest version

The idea of this pose estimator is based on PTAM (Parallel Tracking and Mapping). PTAM is capable of tracking in an unknown environment thanks to the mapping done in parallel. But in fact, if you want to augment reality, it’s generally because you already know what you are looking at. So tracking that works in an unknown environment is not always needed. My idea was simple: instead of doing the mapping in parallel, why not use SFM in a pre-processing step?

| input: point cloud + camera shot | output: position and orientation of the camera |

So my outdoor tracking algorithm will eventually work like this:

The tricky part is that computing the absolute pose can take as long as several “relative pose” estimations. So once you’ve got the absolute pose, it is already out of date: you have to compensate for the delay by accumulating the relative poses that were estimated in the meantime…
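The delay compensation can be illustrated with a minimal sketch. It is not the code of my tracker: it assumes poses are stored as 4x4 camera-to-world matrices and that each relative pose gives the new camera frame expressed in the previous one, and the names `compensate_delay`, `translation` and `rotation_z` are made up for this example.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx, ty, tz):
    """Homogeneous 4x4 translation matrix."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def rotation_z(angle):
    """Homogeneous 4x4 rotation of `angle` radians around the Z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def compensate_delay(absolute_pose, relative_poses):
    """Bring a late absolute pose up to date.

    absolute_pose: camera-to-world pose of the frame that was sent to the
        (slow) absolute pose estimation. Assumed convention.
    relative_poses: frame-to-frame motions estimated while waiting for the
        absolute pose, oldest first. Assumed convention: each one is the
        pose of the new frame expressed in the previous frame.
    """
    pose = absolute_pose
    for rel in relative_poses:
        # Chain each relative motion onto the current estimate.
        pose = mat_mul(pose, rel)
    return pose


if __name__ == "__main__":
    # The absolute pose arrives 2 frames late; the camera kept moving along
    # its own X axis in the meantime.
    late_pose = rotation_z(math.pi / 2)
    motions = [translation(1, 0, 0), translation(1, 0, 0)]
    current = compensate_delay(late_pose, motions)
    print([round(row[3], 6) for row in current[:3]])
```

With another convention (world-to-camera matrices, for example) the multiplication order is reversed, so the order above only holds under the assumptions stated here.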

This is what I’ve got so far: